Deploying MCP Servers on Edge Devices Boosts AI in Europe

Berlin, Wednesday, 20 August 2025.

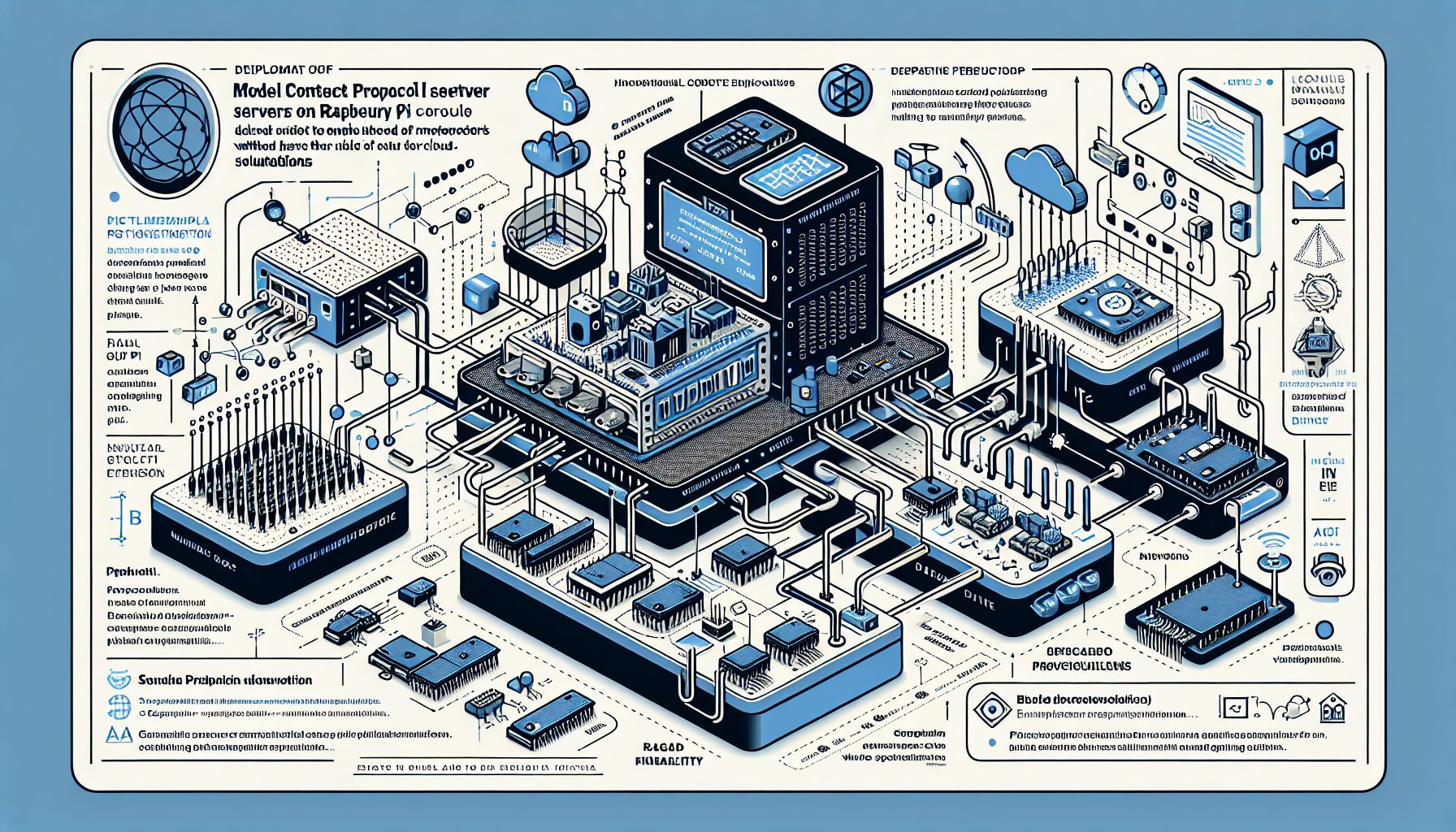

Learn how deploying Model Context Protocol (MCP) servers on Raspberry Pi boards and microcontrollers lets AI workflows run without relying on the cloud, opening new edge-computing use cases across a wide range of European industries.

Introduction to Model Context Protocol (MCP)

Deploying Model Context Protocol (MCP) servers on edge hardware such as the Raspberry Pi and microcontrollers marks a significant shift in how AI workflows are executed across Europe. Running MCP servers on local devices substantially reduces dependence on cloud infrastructure, so data can be processed and acted on in real time. This is essential for intelligent systems operating where connectivity is limited. The approach particularly benefits Internet of Things (IoT) and smart-automation applications, where operational efficiency and low latency are critical [1].

Setting Up MCP on Edge Devices

To deploy MCP on edge devices such as the Raspberry Pi 5, developers can follow a step-by-step setup guide. MCP servers use a bidirectional client-server architecture in which both sides exchange JSON-RPC 2.0 messages, so AI agents can interact with local sensors through the server. With this setup, lightweight devices act as intelligent agents that perform actions such as reading sensor data or controlling attached hardware locally instead of depending on cloud servers. Installation involves registering tools together with metadata that describes their inputs, which enables type-safe interactions, and may include utilities for debugging and validation [1].
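To make the JSON-RPC 2.0 exchange concrete, here is a minimal Python sketch of how a server might dispatch a single `tools/call` request. It uses only the standard library, not the official MCP SDK; the `read_temperature` tool and its fixed reading are hypothetical, and the request and result shapes follow our reading of the MCP specification. A real server would also handle the `initialize` handshake, `tools/list`, and a transport such as stdio.

```python
import json
from typing import Any, Callable, Optional


def read_temperature(arguments: dict[str, Any]) -> str:
    """Hypothetical tool: a real server would query an attached sensor here."""
    return "21.5"  # simulated reading in degrees Celsius


# Hypothetical tool table mapping tool names to handler functions.
TOOLS: dict[str, Callable[[dict[str, Any]], str]] = {
    "read_temperature": read_temperature,
}


def _error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_message(raw: str) -> Optional[str]:
    """Handle one JSON-RPC 2.0 message; return the reply, or None for notifications."""
    try:
        request = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    # A valid request is an object with "jsonrpc": "2.0" and a string method.
    if (not isinstance(request, dict) or request.get("jsonrpc") != "2.0"
            or not isinstance(request.get("method"), str)):
        req_id = request.get("id") if isinstance(request, dict) else None
        return _error(req_id, -32600, "Invalid Request")
    if "id" not in request:
        return None  # notifications never receive a response
    req_id = request["id"]
    if request["method"] != "tools/call":
        return _error(req_id, -32601, "Method not found")
    params = request.get("params")
    if not isinstance(params, dict) or params.get("name") not in TOOLS:
        return _error(req_id, -32602, "Invalid params: unknown tool")
    text = TOOLS[params["name"]](params.get("arguments", {}))
    # MCP-style result: a list of content items, here a single text item.
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "result": {"content": [{"type": "text", "text": text}]}})
```

Passing the line `{"jsonrpc": "2.0", "id": 1, "method": "tools/call", "params": {"name": "read_temperature", "arguments": {}}}` returns a response with the same `id` whose single text content item is `"21.5"`; malformed input yields the standard JSON-RPC error codes instead.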

Advanced Features and Integration

The MCP framework also exposes device I/O as callable tools, making it straightforward for AI agents to perform tasks such as ‘read_temperature’ or ‘toggle_relay’. Developers can debug and validate local MCP servers with tools such as MCP Inspector. In addition, connecting to external models, such as OpenAI’s open-weight gpt-oss models running on more capable hardware, extends the processing power available to edge devices without exceeding their local hardware limits. Various frameworks that run in local environments support these integrations, enabling end-to-end AI solutions [1][2].
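The sketch below illustrates how device I/O such as ‘read_temperature’ and ‘toggle_relay’ might be exposed as tools with descriptive metadata. Each tool carries a JSON Schema for its inputs (MCP tool listings use an `inputSchema` field for this), and `call_tool` checks argument types before touching hardware. Everything hardware-related is simulated: the relay table, the sensor readings, and the helper names are hypothetical stand-ins for real GPIO or sensor drivers, and the type check covers only the flat schemas used here, not full JSON Schema validation.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Simulated hardware state; on a Raspberry Pi these would wrap GPIO/sensor drivers.
RELAYS: dict[int, bool] = {1: False, 2: False}


@dataclass
class Tool:
    name: str
    description: str
    input_schema: dict[str, Any]  # JSON Schema describing the arguments
    handler: Callable[..., Any]


def read_temperature(sensor_id: int) -> float:
    """Hypothetical reading: a fixed value per sensor, for illustration only."""
    return 20.0 + sensor_id * 0.5


def toggle_relay(relay_id: int) -> bool:
    """Flip a simulated relay and return its new state."""
    if relay_id not in RELAYS:
        raise ValueError(f"unknown relay: {relay_id}")
    RELAYS[relay_id] = not RELAYS[relay_id]
    return RELAYS[relay_id]


def _int_schema(field: str) -> dict[str, Any]:
    return {"type": "object", "properties": {field: {"type": "integer"}},
            "required": [field]}


TOOLS = {t.name: t for t in [
    Tool("read_temperature", "Read a temperature sensor (degrees Celsius)",
         _int_schema("sensor_id"), read_temperature),
    Tool("toggle_relay", "Flip a relay and return its new state",
         _int_schema("relay_id"), toggle_relay),
]}

# Map the JSON Schema type names used above to Python types.
_JSON_TYPES = {"integer": int, "number": (int, float), "string": str, "boolean": bool}


def list_tools() -> list[dict[str, Any]]:
    """Metadata an AI agent could inspect before calling any tool."""
    return [{"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in TOOLS.values()]


def call_tool(name: str, arguments: dict[str, Any]) -> Any:
    """Validate arguments against the tool's schema, then run its handler."""
    schema = TOOLS[name].input_schema
    for key in schema.get("required", []):
        if key not in arguments:
            raise ValueError(f"missing argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unexpected argument: {key}")
        # bool is a subclass of int in Python, so reject it unless a boolean is expected.
        wrong_bool = isinstance(value, bool) and spec["type"] != "boolean"
        if wrong_bool or not isinstance(value, _JSON_TYPES[spec["type"]]):
            raise TypeError(f"{key} must be of type {spec['type']}")
    return TOOLS[name].handler(**arguments)
```

For example, `call_tool("toggle_relay", {"relay_id": 1})` switches the simulated relay on, while `{"relay_id": "1"}` is rejected with a `TypeError` before any handler runs. Validating against the declared schema up front is what keeps a model-generated call from reaching hardware with malformed arguments.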

Security and Deployment Considerations

Deploying MCP servers at the edge raises specific security concerns. Threat modeling, access-control safeguards, and formal auditing can mitigate the vulnerabilities inherent in edge computing. Local deployment also keeps data processing private and allows faster recovery when a tool fails. Secure communication channels and SSH-based access further harden the deployment and let operators manage AI infrastructure on edge devices remotely. As demand for such systems grows, these measures are crucial to protecting IoT environments [1][5].
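As one illustration of an access-control safeguard paired with an audit trail, a server could check each tool call against a deny-by-default allowlist and log every decision. The client names, logger name, and policy table below are hypothetical, and the sketch assumes client identity has already been established over an authenticated channel such as SSH or TLS; it does not perform authentication itself.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("mcp.audit")  # hypothetical logger name

# Hypothetical policy: which tools each (already authenticated) client may call.
ALLOWED_TOOLS: dict[str, set[str]] = {
    "dashboard-agent": {"read_temperature"},
    "maintenance-agent": {"read_temperature", "toggle_relay"},
}


def authorize(client_id: str, tool_name: str) -> bool:
    """Deny by default: unknown clients and unlisted tools are rejected."""
    allowed = tool_name in ALLOWED_TOOLS.get(client_id, set())
    # Record every decision with a UTC timestamp so it can be audited later.
    audit_log.info("%s client=%s tool=%s decision=%s",
                   datetime.now(timezone.utc).isoformat(), client_id,
                   tool_name, "allow" if allowed else "deny")
    return allowed
```

A server would call `authorize(client_id, tool_name)` before dispatching each tool call and return an error when it yields `False`. Denying by default means a newly added tool stays unreachable until someone explicitly grants it, and logging denials as well as approvals gives auditors a record of attempted misuse.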